That doctors can peer into the human body without making a single incision once seemed like a miraculous concept. But medical imaging in radiology has come a long way, and the latest artificial intelligence (AI)-driven techniques are going much further: they exploit the massive computing power of AI and machine learning to mine body scans for differences that the human eye can miss.

Imaging in medicine now involves sophisticated ways of analyzing every data point to distinguish disease from health and signal from noise. If the first few decades of radiology were about refining the resolution of the pictures taken of the body, then the next decades will be dedicated to interpreting that data to ensure nothing is overlooked.

Imaging is also evolving from its initial focus (diagnosing medical conditions) to playing an integral part in treatment as well, especially in cancer care. Doctors are beginning to lean on imaging to monitor tumors and the spread of cancer cells, giving them a better, faster way of knowing whether therapies are working. That new role will transform the types of treatments patients receive and vastly improve the information doctors get about how well those treatments are working, so that they can ultimately make better choices about which treatment options patients need.

“In the next five years, we will see functional imaging become part of care,” says Dr. Basak Dogan, associate professor of radiology at the University of Texas Southwestern Medical Center. “We don’t see the current standard imaging answering the real clinical questions. But functional techniques will be the answer for patients who want higher precision in their care so they can make better informed decisions.”

Detecting problems earlier

Whether the images are X-rays, computerized tomography (CT) scans, magnetic resonance imaging (MRI), or ultrasounds, the first hurdle in making the most of what they offer is to automate their reading as much as possible, which saves radiologists valuable time. Computer-aided algorithms have proven their worth here, because massive computing power has made it possible to train computers to distinguish abnormal from normal findings. Software specialists and radiologists have been teaming up for years to develop these algorithms: radiologists supply computer programs with their findings on tens of thousands of normal and abnormal images, which teaches the computer to recognize when an image contains something that falls outside normal parameters. The more images the computer has to compare and learn from, the better it becomes at fine-tuning those distinctions.
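To make the training idea concrete, here is a minimal sketch in Python. It assumes each scan has already been reduced to a short numeric feature vector; the features, the synthetic data, and the function names `make_labeled_images`, `train_classifier`, and `predict` are all hypothetical. The sketch fits a logistic-regression classifier to radiologist labels (1 = abnormal, 0 = normal) by gradient descent. Clinical systems generally use far larger models, such as deep neural networks working on the pixels themselves, so treat this only as an illustration of learning from labeled examples.

```python
import numpy as np

def make_labeled_images(n, n_features=5, seed=0):
    """Synthetic stand-in for radiologist-labeled scans (hypothetical).
    Each row is a feature vector for one image; label 1 = abnormal, 0 = normal."""
    rng = np.random.default_rng(seed)
    labels = rng.integers(0, 2, size=n)
    # Abnormal images are shifted toward +1 and normal ones toward -1 in every feature.
    features = rng.normal(size=(n, n_features)) + (2.0 * labels[:, None] - 1.0)
    return features, labels

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_classifier(X, y, lr=0.1, epochs=500):
    """Fit logistic regression by batch gradient descent on the log-loss."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)            # predicted probability of "abnormal"
        w -= lr * X.T @ (p - y) / len(y)  # gradient of the mean log-loss w.r.t. w
        b -= lr * np.mean(p - y)          # gradient w.r.t. the bias
    return w, b

def predict(w, b, X, threshold=0.5):
    """Label an image abnormal (1) when its predicted probability reaches the threshold."""
    return (sigmoid(X @ w + b) >= threshold).astype(int)

X_train, y_train = make_labeled_images(2000, seed=1)
X_test, y_test = make_labeled_images(500, seed=2)  # images the model never saw
w, b = train_classifier(X_train, y_train)
accuracy = np.mean(predict(w, b, X_test) == y_test)
print(f"held-out accuracy: {accuracy:.3f}")
```

Evaluating on a separate set of images, rather than the training set, is the usual way to check that the model has learned the distinction instead of memorizing its examples.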

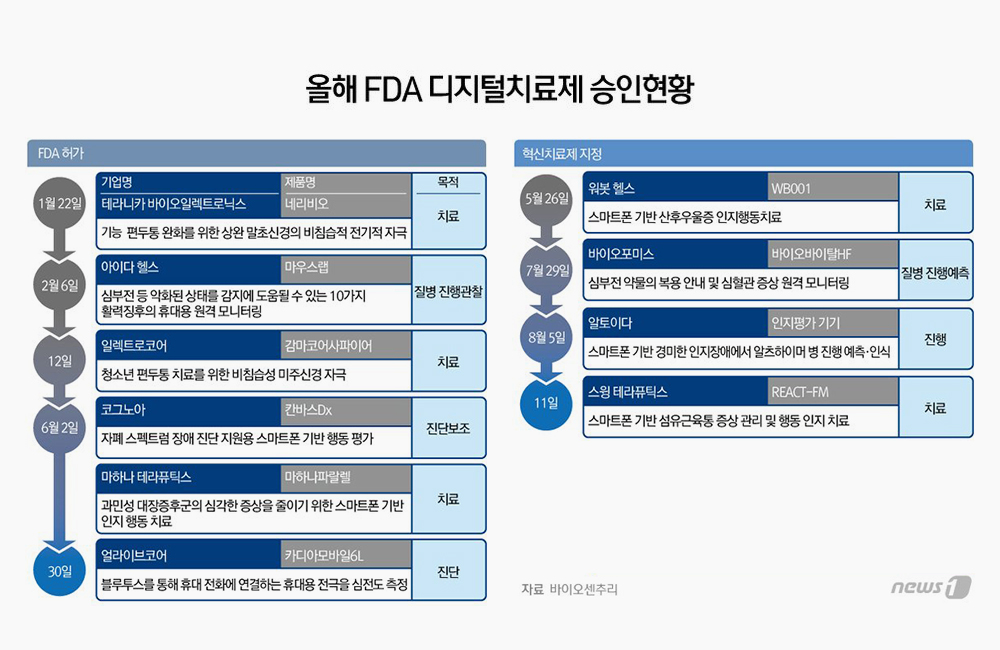

For the U.S. Food and Drug Administration (FDA) to approve an algorithm involving imaging, it must be accurate 80% to 90% of the time. So far, the FDA has approved about 420 of these for various diseases (mostly cancer). The FDA still requires that a human be the ultimate arbiter of what the machine-learning algorithm finds, but such techniques are critical for flagging images that might contain suspicious findings for doctors to review—and ultimately provide faster answers for patients.
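The flagging role described above can be sketched as a worklist that reorders studies rather than removing any, since a human still makes the final call. This is a hedged illustration: the `Study` class, the 0.7 threshold, the scores, and the labels are hypothetical. The article does not say which metric its 80% to 90% figure refers to, so the `evaluate` helper reports three common ones (accuracy, sensitivity, and specificity) against radiologist-confirmed labels.

```python
from dataclasses import dataclass

@dataclass
class Study:
    study_id: str
    score: float  # hypothetical model output: estimated probability of an abnormal finding

def build_worklist(studies, threshold=0.7):
    """Split studies into flagged (read first, highest score first) and routine.
    Nothing is discarded: a radiologist still reads every study."""
    flagged = sorted((s for s in studies if s.score >= threshold),
                     key=lambda s: s.score, reverse=True)
    routine = [s for s in studies if s.score < threshold]
    return flagged, routine

def evaluate(scores, truth, threshold=0.7):
    """Compare the model's flags with radiologist-confirmed labels (1 = abnormal)."""
    pairs = list(zip(scores, truth))
    tp = sum(1 for s, t in pairs if s >= threshold and t == 1)
    tn = sum(1 for s, t in pairs if s < threshold and t == 0)
    fp = sum(1 for s, t in pairs if s >= threshold and t == 0)
    fn = sum(1 for s, t in pairs if s < threshold and t == 1)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,  # share of abnormal studies flagged
        "specificity": tn / (tn + fp) if tn + fp else 0.0,  # share of normal studies not flagged
    }

studies = [Study("A", 0.95), Study("B", 0.10), Study("C", 0.72), Study("D", 0.40)]
flagged, routine = build_worklist(studies)
print([s.study_id for s in flagged])  # ['A', 'C']
print([s.study_id for s in routine])  # ['B', 'D']
metrics = evaluate([s.score for s in studies], truth=[1, 0, 0, 1])
print(metrics)  # {'accuracy': 0.5, 'sensitivity': 0.5, 'specificity': 0.5}
```

In this toy example, study C is a false alarm and study D is a missed finding. That is why the flag only sets the reading order: study D still reaches a radiologist.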

At Mass General Brigham, doctors use about 50 such algorithms to help them with patient care, ranging from detecting aneurysms and cancers to spotting embolisms and signs of stroke among emergency-room patients, many of whom will present with general symptoms that these conditions share. About half have been approved by the FDA, and the remaining ones are being tested in patient care.

“The goal is to find things early. In some cases, it may take humans days to find an accurate diagnosis, whereas computers can run without sleep continuously and find those patients who need care right away,” says Dr. Keith Dreyer, chief data science officer and vice chairman of radiology at Mass General Brigham. “If we can use computers to do that, then it gets that patient to treatment much faster.”

Tracking patients more thoroughly

While computer-assisted triaging is the first step in integrating AI-based support in medicine, machine learning is also becoming a powerful way to monitor patients and track even the smallest changes in their conditions. This is especially critical in cancer, where the tedious task of determining whether someone’s tumor is growing, shrinking, or remaining the same is essential for making decisions about how well treatments are working. “We have trouble understanding what is happening to the tumor as patients undergo chemotherapy,” says Dogan. “Our standard imaging techniques unfortunately can’t detect any change until after midway through chemo”—which can be months into the process—“when some kind of shrinkage starts occurring.”

Imaging can be useful in those situations by picking up changes in tumors that aren’t related to their size or anatomy. “In the very early stages of chemotherapy, most of the changes in a tumor are not quite at the level of cell death,” says Dogan. “The changes are related to modifying interactions between the body’s immune cells and cancer cells.” And in many cases, cancer doesn’t shrink in a predictable way from the outside in. Instead, pockets of cancer cells within a tumor may die off while others continue to thrive, leaving the overall mass pockmarked, like a moth-eaten sweater. In fact, because some of that cell death is connected to inflammation, a tumor may even grow larger in some cases, although that doesn’t necessarily indicate more cancer cell growth. Standard imaging currently can’t distinguish how much of a tumor is still alive and how much is dead. The most commonly used breast-cancer imaging techniques, mammography and ultrasound, are designed instead to pick up anatomical features.

At UT Southwestern, Dogan is testing two ways that imaging can be used to track functional changes in breast cancer patients. In one, funded by the National Institutes of Health, she images breast cancer patients after one cycle of chemotherapy, injecting microbubbles of gas to pick up slight changes in pressure around the tumor. The bubbles tend to accumulate around tumors, because growing cancers have more blood vessels than other tissues to support their expansion, and ultrasound measures the changes in pressure of these bubbles.

In another study, Dogan is testing optoacoustic imaging, which turns light into sound signals. Lasers are shone on breast tissue, which absorbs the light and produces sound waves that are captured and analyzed. Because oxygen-rich and oxygen-poor blood absorb light differently, this technique is well suited to detecting tumors’ oxygen levels; cancer cells tend to need more oxygen than normal cells to continue growing. Changes in the sound waves can reveal which parts of the tumor are still growing and which are not. “Just by imaging the tumor, we can tell which are most likely to metastasize to the lymph nodes and which are not,” says Dogan. Currently, clinicians can’t tell which cancers will spread to the lymph nodes and which won’t. “It could give us information about how the tumor is going to behave and potentially save patients unnecessary lymph node surgeries that are now part of standard care.”

The technique could also help find early signs of cancer cells that have spread to other parts of the body, well before they show up in visual scans and without the need for invasive biopsies. Focusing on organs to which cancer cells typically spread, such as the bones, liver, and lungs, could give doctors a head start on catching these new deposits of cancer cells.

Spotting unseen abnormalities

With enough data and images, these algorithms could even find abnormalities, in any condition, that no human could detect, says Dreyer. His team is also developing an algorithm that measures certain biomarkers in the human body, whether anatomical or functional, so that it can flag changes in those metrics suggesting someone is likely to have a stroke, fracture, heart attack, or some other adverse event. That’s the holy grail of imaging, says Dreyer, and while it’s a few years away, “those are the kinds of things that are going to be transformational in healthcare for AI.”

To get there will take enormous amounts of data from hundreds of thousands of patients. But the siloed health care systems of the U.S. make pooling such information challenging. Federated learning is one solution: instead of gathering patient records in one place, scientists send an algorithm to train on each institution’s own anonymized patient data, and only the resulting model updates, not the records themselves, are combined. That way, privacy is maintained and institutions won’t have to jeopardize their secure systems.
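Federated averaging (FedAvg) is one common federated learning algorithm, and a minimal Python sketch of it follows. Everything here is illustrative: the three "hospitals" hold synthetic data, and `make_site_data`, `local_update`, and `federated_averaging` are hypothetical names. Each site trains a shared logistic-regression model on its own records for a few steps, and only the updated weights travel to a coordinator, which averages them in proportion to each site's number of cases. Real deployments may add further safeguards, such as secure aggregation of the updates.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def make_site_data(n, rng, n_features=4):
    """Synthetic stand-in for one hospital's labeled cases (never shared)."""
    y = rng.integers(0, 2, size=n)
    # Centered classes (+1 vs -1 shift), so this sketch omits a bias term.
    X = rng.normal(size=(n, n_features)) + (2.0 * y[:, None] - 1.0)
    return X, y

def local_update(w, X, y, lr=0.1, epochs=20):
    """Gradient descent on one site's data, starting from the global model.
    Only the updated weights are returned; X and y stay on site."""
    w = w.copy()
    for _ in range(epochs):
        p = sigmoid(X @ w)
        w -= lr * X.T @ (p - y) / len(y)
    return w

def federated_averaging(sites, n_features=4, rounds=10):
    """FedAvg: average the local models, weighting each site by its sample count."""
    w_global = np.zeros(n_features)
    total = sum(len(y) for _, y in sites)
    for _ in range(rounds):
        local_models = [local_update(w_global, X, y) for X, y in sites]
        w_global = sum(len(y) / total * w_local
                       for (_, y), w_local in zip(sites, local_models))
    return w_global

rng = np.random.default_rng(0)
sites = [make_site_data(n, rng) for n in (300, 800, 150)]  # three hypothetical hospitals
w = federated_averaging(sites)

# For illustration only: score the shared model on all sites' training data.
X_all = np.vstack([X for X, _ in sites])
y_all = np.concatenate([y for _, y in sites])
acc = np.mean((sigmoid(X_all @ w) >= 0.5) == y_all)
print(f"accuracy of the federated model: {acc:.3f}")
```

The pooling step above exists only to check the result in this toy setting; in an actual federation, each site would evaluate the shared model on its own data.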

If more of those models are validated, through federated learning or otherwise, AI-based imaging could even start to help patients at home. Now that COVID-19 has made self-testing and telehealth more routine, people may eventually be able to get imaging information from, for example, portable ultrasound devices paired with a smartphone app.

“The real change in health care that is going to happen from AI is that it will deliver a lot of solutions to patients themselves, or before they become patients, so that they can stay healthy,” says Dreyer. That would perhaps be the most potent way to optimize imaging: empowering patients to learn from it and make the most informed decisions possible about protecting their health.