ChatGPT has gained more than 100 million users since Microsoft-backed OpenAI launched the AI service five months ago.

People across the globe are using the technology for a multitude of purposes, including writing high school essays, chatting with people on dating apps and producing cover letters.

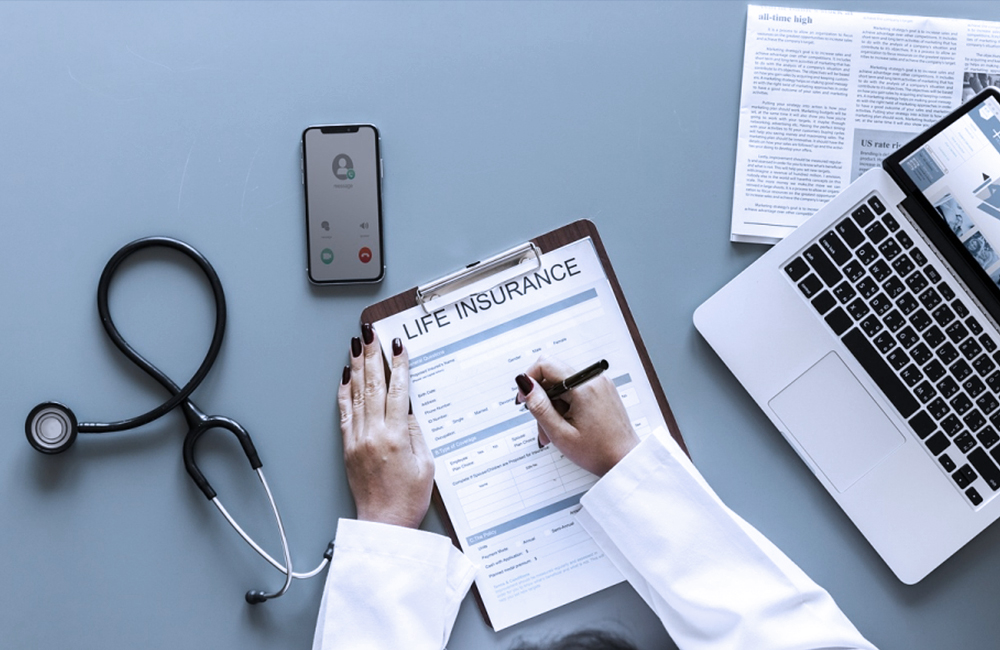

The healthcare sector has been notoriously slow to adopt new technologies, but ChatGPT has already begun to enter the field. For example, healthcare software giant Epic recently announced that it will integrate GPT-4, the latest version of the AI model, into its electronic health record.

So how should healthcare leaders feel about ChatGPT and its entrance into the sector? During a Tuesday keynote session at the HIMSS conference in Chicago, technology experts agreed that the AI model is exciting but needs guardrails as it is implemented in healthcare settings.

Healthcare leaders are already beginning to explore potential use cases for ChatGPT, such as assisting with clinical notetaking and generating hypothetical patient questions for medical students to answer.

Panelist Peter Lee, Microsoft’s corporate vice president for research and incubation, said his company didn’t expect adoption to happen so quickly; it had anticipated that the tool would reach about 1 million users.

Lee urged the healthcare leaders in the room to familiarize themselves with ChatGPT so they can make informed decisions about “whether this technology is appropriate for use at all, and if it is, in what circumstances.”

He added that there are “tremendous opportunities here, but there are also significant risks, and risks that we probably won’t even know about yet.”

Fellow panelist Reid Blackman, CEO of AI ethics advisory firm Virtue Consultants, pointed out that the general public’s understanding of how ChatGPT works is quite poor.

Most people think they are using an AI model that can deliberate, Blackman said. In other words, most users assume that ChatGPT produces accurate content and can explain the reasoning behind its conclusions. But ChatGPT wasn’t designed to have a concept of truth or correctness; its objective function is to be convincing. It’s meant to sound correct, not to be correct.

“It’s a word predictor, not a deliberator,” Blackman declared.

AI’s risks usually aren’t generic but specific to each use case, he pointed out. Blackman encouraged healthcare leaders to develop a way to systematically identify the ethical risks of particular use cases and to begin assessing appropriate risk mitigation strategies sooner rather than later.

Blackman wasn’t alone in his wariness. Another panelist, Kay Firth-Butterfield, CEO of the Center for Trustworthy Technology, was among the more than 27,500 leaders who signed an open letter last month calling for an immediate pause of at least six months on the training of AI systems more powerful than GPT-4. Elon Musk and Steve Wozniak were among the other tech leaders who signed the letter.

Firth-Butterfield raised several ethical and legal questions: Is the data that ChatGPT is trained on inclusive? Doesn’t this advancement leave out the three billion people across the globe who lack internet access? Who gets sued if something goes wrong?

The panelists agreed that these are all important questions without conclusive answers right now. As AI continues to evolve rapidly, they said, the healthcare sector must establish an accountability framework for how it will address the risks of new technologies like ChatGPT going forward.