From left: Stephanie Fiore, Director of Digital Health at Elevance Health; Farzana Dudhwala, AI Governance and Policy Manager at Meta; Laura Caroli, Accredited European Parliamentary Assistant; and Elham Tabassi, a senior research scientist at NIST, speak on new rules and tools in artificial intelligence (AI) development in Las Vegas, Thursday. (Credit: CTA)

CES 2023 closed day one at the Las Vegas Convention Center on Thursday, local time, with a panel discussion on new policy changes, to be implemented this year, that aim to guide artificial intelligence (AI) innovation in a fair, accurate, and safe manner, including their specific impacts on the healthcare industry.

The panel featured an all-female line-up: Farzana Dudhwala, Meta’s AI Governance and Policy Manager; Elham Tabassi, NIST Senior Research Scientist; Laura Caroli, Accredited European Parliamentary Assistant; and Stephanie Fiore, Director of Digital Health at Elevance Health.

The session highlighted some of the changes coming to U.S. and EU policy that aim to bring a more human-centered approach to AI innovation, using risk-based analysis so that the full potential of AI can be enjoyed safely.

Providing the EU perspective, Caroli explained that the EU AI Act, which is scheduled to be approved by the end of the year, will become applicable two years after it enters into force.

Tabassi spoke about the National Institute of Standards and Technology’s (NIST’s) AI Risk Management Framework (AI RMF), which is scheduled to be published later this month.

The document will provide flexible but measured guidance to help make AI technologies more accurate, reliable, and trustworthy, she said.

Shedding some light on AI in the health sector, Fiore explained that AI contributes to modernizing electronic health records, enabling interoperability between hospital systems via FHIR (Fast Healthcare Interoperability Resources) application programming interfaces (APIs), detecting eye disease earlier from retina scans, and alleviating administrative tasks at physician offices.

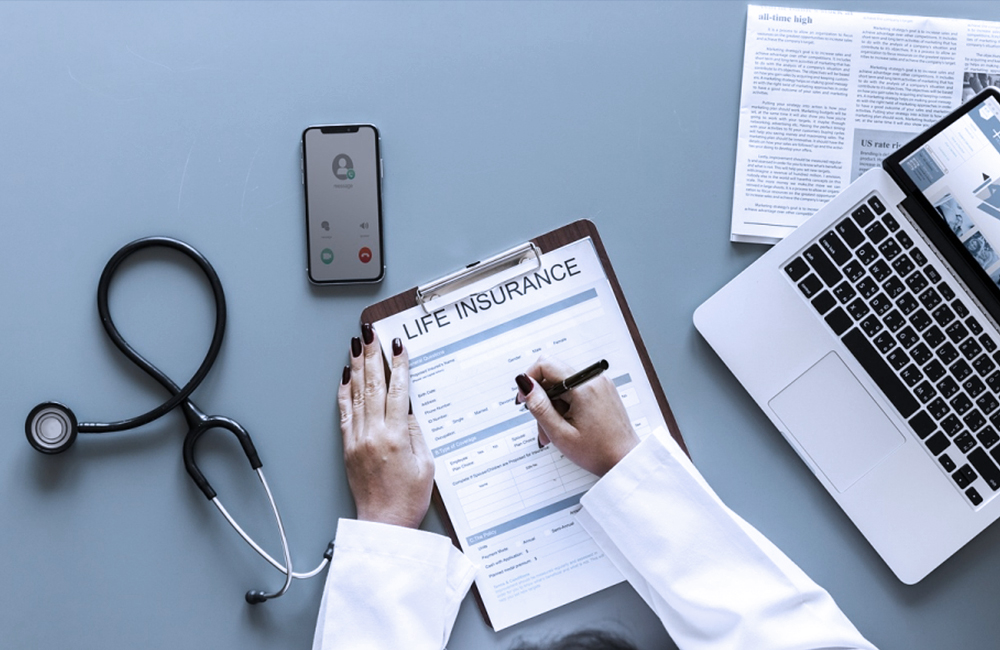

She also explained the role of the Health Insurance Portability and Accountability Act (HIPAA) in providing the “minimum necessary” standard for protecting and securing personal health information.

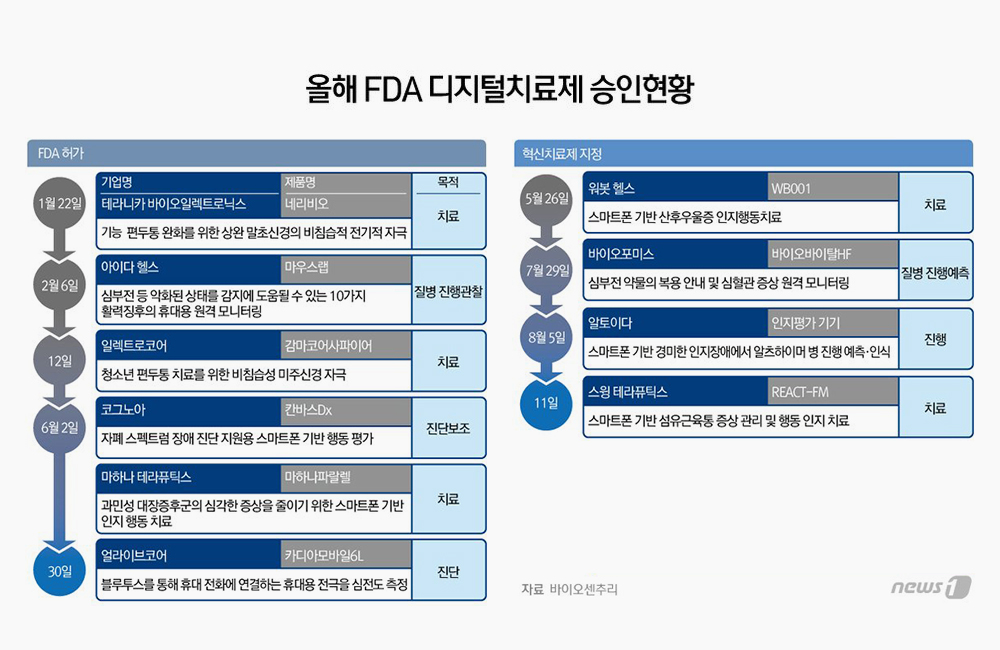

For medical devices, she referenced the FDA’s Digital Health Policy Navigator, a tool that helps developers determine whether their product’s software functions are potentially the focus of FDA oversight.

Providing a more global industry perspective for Meta, Dudhwala stressed the importance of involving all stakeholders in the policy-making process, so that laws better reflect industry-specific needs and are therefore more effective.

Along with the risk-based approach, Caroli also mentioned the EU’s prohibitions on certain uses of AI, which will soon take effect. These include social scoring systems such as the one used in China, and real-time remote biometric identification in publicly accessible spaces, with exceptions for law enforcement.

In this regard, Fiore also advocated for the risk-based approach in healthcare, since many decisions are matters of life and death and should be heavily regulated, but she acknowledged that simpler administrative tasks would not require the same level of regulation.

However, Caroli quickly acknowledged the difficulty of classifying some health-related risks. “Fundamental human rights are not something we can easily put on a scale. It’s either you infringe on them or you don’t. It’s not an easy task, but we are trying our best to get it right,” she said.

Pivoting to the topic of fairness and inclusivity in AI innovation, Meta’s Dudhwala responded, “Sometimes datasets lack diversity and therefore do not accurately represent the different groups, resulting in stereotypes and biases in the AI systems we develop.”

On the flip side, she also noted that in some instances data represents society too well, including all of its structural inequalities.

Therefore, she said, “AI developers have a role to play in overcoming these societal inequalities.”

On that note, she mentioned a method called “secure multiparty computation,” which can help alleviate biases found within data while addressing the privacy concerns of data exchange.
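Secure multiparty computation covers a family of cryptographic protocols, and the panel did not describe a specific one. As a minimal illustration, assuming the simplest building block, additive secret sharing, the Python sketch below shows how several parties could compute a combined statistic, such as the total number of records they hold for a given demographic group, without revealing their individual counts. The function names, the modulus, and the hospital counts are hypothetical, all parties are simulated in a single process, and this is not Meta’s implementation.

```python
import secrets

# Hypothetical modulus (the Mersenne prime 2^61 - 1); all arithmetic is mod PRIME.
PRIME = 2**61 - 1


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n_parties random shares that sum to value mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % PRIME
    return shares + [last]


def secure_sum(private_values: list[int]) -> int:
    """Sum private inputs; each party only ever sees random-looking shares."""
    n = len(private_values)
    # Each party i splits its own value and (conceptually) sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Party j adds up the shares it received; a single share reveals nothing on its own.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Combining the published partial sums recovers only the total.
    return sum(partial_sums) % PRIME


# Hypothetical example: three hospitals each hold a private count of
# patients from one demographic group.
counts = [120, 45, 300]
print(secure_sum(counts))  # 465
```

This sketch assumes every party follows the protocol honestly; a real deployment would run each party on separate infrastructure and typically needs stronger protocols to tolerate participants who deviate from it.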

Regarding the use of standards to support compliance with AI rules in the healthcare sector, Fiore said, “Standards for healthcare are very much welcomed and should ensure they align with but don’t overlap with existing policies, especially as individual states are also making their own policies.”

Having standards is crucial to reducing confusion and ensuring the best outcomes for the development of AI in healthcare going forward, she said.